PlanningAlerts is dead, long live PlanningAlerts

Posted: October 10, 2011 Filed under: hyperlocal, local government, mapping, open data, openlylocal | Tags: development, hyperlocal, local, local data, Local Democracy, local government, Open Government, planning, Planning Applications, PlanningAlerts

One of the first and best examples of how data could make a difference to ordinary people’s lives was the inspirational PlanningAlerts.com, built by Richard Pope, Mikel Maron, Sam Smith, Duncan Parkes, Tom Hughes and Andy Armstrong.

In doing one simple thing – allowing ordinary people to subscribe to an email alert when there was a planning application near them, regardless of council boundaries – it showed that data mattered and, more than that, that it had the power to improve the interaction between government and the community.

It did so many revolutionary things and fought so many important battles that everyone in the open data world (and not just the UK) owes all those who built it a massive debt of gratitude. Richard Pope and Duncan Parkes in particular put in masses of hours writing scrapers, fighting the battle to open postcodes and providing a simple but powerful user experience.

However, over the past year it had become increasingly difficult to keep the site going, with many of the scrapers falling into disrepair (aka scraper rot). Add to that the demands of a day job, and the cost of running a server, and it’s a tribute to both Richard and Duncan that they kept PlanningAlerts going for as long as they did.

So when Richard reached out to OpenlyLocal and asked if we were interested in taking over PlanningAlerts we were both flattered and delighted. Flattered and delighted, but also a little nervous. Could we take this on in a sustainable manner, and do as good a job as they had done?

Well, after going through the figures, and looking at how we might architect it, we decided we could – there were parts of the problem that were similar to what we were already doing with OpenlyLocal – but we’d need to make sustainability a core goal right from the get-go. That would mean a business plan, and also a way for the community to help out.

Both of those had been given thought by us and by Richard, and we’d come to pretty much identical ideas: using a freemium model to generate income, and ScraperWiki to allow the community to help with writing scrapers, especially for those councils that didn’t use one of the common systems. But we also knew that we’d need to accelerate this process using a bounty model, such as the one that’s been so successful for OpenCorporates.

Now all we needed was the finance to kick-start the whole thing, and we contacted Nesta to see if they were interested in providing seed funding by way of a grant. I’ve been quite critical of Nesta’s processes in the past, but to their credit they didn’t hold this against us and, more than that, showed they were capable of working, and eager to work, in a fast, lightweight & agile way.

We didn’t quite manage to get the funding or do the transition before Richard’s server rental ran out, but we did save all the existing data, and are now hard at work building PlanningAlerts into OpenlyLocal, and gratifyingly making good progress. The PlanningAlerts.com domain is also in the middle of being transferred, and this should be completed in the next day or so.

We expect to start displaying the original scraped planning applications over the next few weeks, and have already started work on scrapers for the main systems used by councils. We’ll post here, and on the OpenlyLocal and PlanningAlerts Twitter accounts, as we progress.

We’re also liaising with PlanningAlerts Australia, who were originally inspired by PlanningAlerts UK, but have since considerably raised the bar. In particular we’ll be aiming to share a common data structure with them, making it easy to build applications based on planning applications from either source.

And, finally, of course, all the data will be available as open data, using the same Open Database Licence as the rest of OpenlyLocal.

Videoing council meetings redux: progress on two fronts

Posted: February 22, 2011 Filed under: hyperlocal, local government, openlylocal | Tags: Councils, data, hyperlocal, local, Local Democracy, local government, Open Government, youtube

Tonight, hyperlocal bloggers (and in fact any ordinary members of the public) got two great boosts in their access to council meetings, and their ability to report on them.

Windsor & Maidenhead this evening passed a motion to allow members of the public to video council meetings. This follows on from my abortive attempt late last year to video one of W&M’s council meetings – see the full story here, video embedded below – which itself followed on from the simple suggestion I’d made a couple of months ago to let citizens video council meetings. I should stress that that attempt had been pre-arranged with a cabinet member, in part to see how it would be received – not well, as it turned out. But having pushed those boundaries, and with, I dare say, a bit of lobbying from the transparency-minded members, Windsor & Maidenhead have made the decision to fully open up their council meetings.

Separately, though perhaps not entirely coincidentally, the Department for Communities & Local Government tonight issued a press release which called on councils across the country to fully open up their meetings to the public in general and hyperlocal bloggers in particular.

Councils should open up their public meetings to local news ‘bloggers’ and routinely allow online filming of public discussions as part of increasing their transparency, Local Government Secretary Eric Pickles said today.

To ensure all parts of the modern-day media are able to scrutinise Local Government, Mr Pickles believes councils should also open up public meetings to the ‘citizen journalist’ as well as the mainstream media, especially as important budget decisions are being made.

Local Government Minister Bob Neill has written to all councils urging greater openness and calling on them to adopt a modern day approach so that credible community or ‘hyper-local’ bloggers and online broadcasters get the same routine access to council meetings as the traditional accredited media have.

The letter sent today reminds councils that local authority meetings are already open to the general public, which raises concerns about why in some cases bloggers and press have been barred.

Importantly, the letter also tells councils that giving greater access will not contradict data protection law requirements, which was the reason I was given for W&M prohibiting me from filming.

So, hyperlocal bloggers, tweet, photograph and video away. Do it quietly, do it well, and raise merry hell in your blogs and local press if you’re prohibited, and maybe we can start another scoreboard to measure the progress. To those councils who videocast, make sure that the videos are downloadable under the Open Government Licence, and we’ll avoid the ridiculousness of councillors being disciplined for increasing access to the democratic process.

And finally, if we can collectively think of a way of tagging the videos on YouTube or Vimeo with the council and meeting details, we could even automatically show them on the relevant meeting page on OpenlyLocal.
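One possible tagging convention, sketched purely as an illustration: Flickr-style machine tags of the form `namespace:predicate=value`. The `openlylocal` namespace and the `council` and `meeting_date` predicates below are hypothetical, not something YouTube, Vimeo or OpenlyLocal defines; the point is that once a convention is agreed, picking the council and meeting out of a video’s tags is trivial.

```python
import re
from typing import Optional

# Hypothetical convention modelled on Flickr-style machine tags: namespace:predicate=value
MACHINE_TAG = re.compile(r"^(?P<ns>[a-z][a-z0-9_]*):(?P<pred>[a-z][a-z0-9_]*)=(?P<value>.+)$")


def parse_machine_tags(tags):
    """Return {(namespace, predicate): value} for tags that fit the pattern; ordinary tags are ignored."""
    parsed = {}
    for tag in tags:
        m = MACHINE_TAG.match(tag.strip().lower())
        if m:
            parsed[(m["ns"], m["pred"])] = m["value"]
    return parsed


def meeting_reference(tags) -> Optional[tuple]:
    """Return (council, meeting_date) if both hypothetical openlylocal tags are present, else None."""
    t = parse_machine_tags(tags)
    council = t.get(("openlylocal", "council"))
    meeting_date = t.get(("openlylocal", "meeting_date"))
    return (council, meeting_date) if council and meeting_date else None
```

A video tagged `openlylocal:council=windsor-and-maidenhead` and `openlylocal:meeting_date=2011-02-22` would yield `("windsor-and-maidenhead", "2011-02-22")`, which a site could then look up against its own list of meetings.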

Local spending data in OpenlyLocal, and some thoughts on standards

Posted: June 17, 2010 Filed under: api, local government, open data, openlylocal, semantic web | Tags: local, local data, Local Democracy, ONS, spending

A couple of weeks ago Will Perrin and I, along with some feedback from the Local Public Data Panel on which we sit, came up with some guidelines for publishing local spending data. They were a first draft, based on a request by Camden council for some guidance, in light of the announcement that councils will have to start publishing details of spending over £500.

Now I’ve got strong opinions about standards: they should be developed from real world problems, by the people using them and should make life easier, not more difficult. It slightly concerned me that in this case I wasn’t actually using any of the spending data – mainly because I hadn’t got around to adding it in to OpenlyLocal yet.

This week, I remedied this, and pulled in the data from those authorities that had published their local spending data – Windsor & Maidenhead, the GLA and the London Borough of Richmond upon Thames. Now there are a couple of sites (including Adrian Short’s Armchair Auditor, which focuses on spending categories) already pulling the Windsor & Maidenhead data but, as far as I’m aware, they don’t include the other two authorities, and this adds a different dimension to things, as you want to be able to compare the suppliers across authorities.

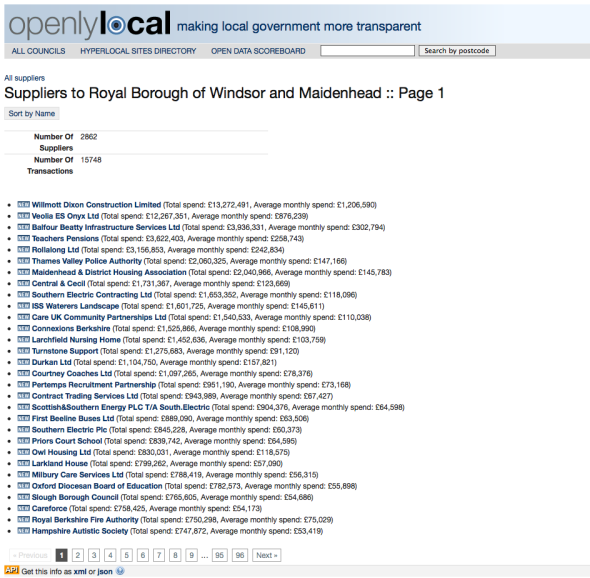

First, a few pages from OpenlyLocal showing how I’ve approached it (bear in mind they’re a very rough first draft, and I’m concentrating on the data rather than the presentation). You can see the biggest suppliers to a council right there on the council’s main page (e.g. Windsor & Maidenhead, GLA, Richmond):

Clicking through to more info gets you a paginated view of all suppliers (in Windsor & Maidenhead’s case there are over 2800 so far):

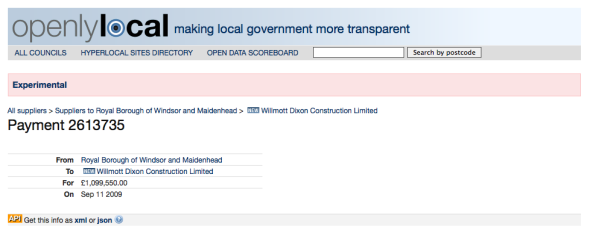

Clicking any of these will give you the details for that supplier, including all the payments to them:

And clicking on the amount will give you a page with just the transaction details, so it can be emailed to others.

But we’re getting ahead of ourselves. The first job is to import the data from the CSV files into a database, and this was where the first problems occurred: not in the CSV format, which is fine, but in the consistency of the data.

Take Windsor & Maidenhead (you should be able to open these files in any spreadsheet program). Look at each data set in turn and you find that there’s very little consistency – the earliest sets don’t have any dates and aggregate across a whole quarter (but do helpfully have the internal Supplier ID as well as the supplier name). Later sets have the transaction date (although one uses the US date format, which could catch out those not checking them manually), but omit the supplier ID and cost centre.

With the GLA figures, there’s a similar story, with the type of data and the names used to describe it changing seemingly at random between data sets. Some of the 2009 ones do have transaction dates, but the 2010 ones generally don’t, and the supplier field goes by different names, from Supplier to Supplier Name to Vendor.

This is not to criticise those bodies – it’s difficult to produce consistent data if you’re making the rules up as you go along (and given there weren’t any established precedents, that’s what they were doing), and doing much of it by hand. Also, they are doing it first and helping us understand where the problems lie (and where they don’t). In short, they are failing forward – getting on with it so they can make mistakes from which they (and, crucially, others) can learn.
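As a minimal sketch of the clean-up this implies (in Python, and not the code OpenlyLocal actually uses), one approach is to map each file’s column headings onto one canonical set and make the date order an explicit, per-file choice. The alias table and field names are hypothetical, built only from the headings mentioned above.

```python
import csv
import io
from datetime import date, datetime
from typing import Optional

# Hypothetical alias table: raw headings seen across data sets -> one canonical field name.
HEADER_ALIASES = {
    "supplier": "supplier_name",
    "supplier name": "supplier_name",
    "vendor": "supplier_name",
    "date": "date",
    "transaction date": "date",
}


def canonical_header(heading: str) -> str:
    """Lower-case and collapse whitespace, then apply the alias table (unknown headings pass through)."""
    key = " ".join(heading.lower().split())
    return HEADER_ALIASES.get(key, key)


def parse_date(raw: str, us_format: bool = False) -> Optional[date]:
    """Parse dd/mm/yyyy, or mm/dd/yyyy when us_format is True; a blank value means no date was published."""
    raw = raw.strip()
    if not raw:
        return None
    return datetime.strptime(raw, "%m/%d/%Y" if us_format else "%d/%m/%Y").date()


def read_payments(text: str, us_format: bool = False) -> list:
    """Read one CSV file into dicts keyed by canonical field names."""
    reader = csv.reader(io.StringIO(text))
    headers = [canonical_header(h) for h in next(reader)]
    rows = []
    for values in reader:
        row = dict(zip(headers, values))
        if "date" in row:
            row["date"] = parse_date(row["date"], us_format)
        rows.append(row)
    return rows
```

The `us_format` flag has to be set by someone who has actually looked at the file: a date such as 03/04/2010 parses happily either way, which is exactly how a US-format file can slip through unnoticed.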

But who are these suppliers?

The bigger problem, as I’ve said before, is being able to identify the suppliers, and this becomes particularly acute when you want to compare across bodies (who may name the same company or body slightly differently). Ideally (as we put in the first draft of the proposals), we would have the company number (when we’re talking about a company, at any rate), but we recognised that many accounts systems simply won’t have this information, and so we do need some information that helps us identify them.

Why do we want to know this information? For the same reason we want any ID (you might as well ask why Companies House issues Company Numbers and requires all companies to put that number on their correspondence) – to positively identify something without messing around with how someone has decided to write the name.

With the help of the excellent Companies Open House I’ve had a go at matching the names to company numbers, but it’s only been partially successful. When it is, you can do things like this (showing spend with other councils on a supplier’s page):

It’s also going to allow me to pull in other information about the company, from Companies House and elsewhere. For other bodies (i.e. those without a company number), we’re going to have to find another way of identifying them, and that’s next on the list to tackle.
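A crude version of the name matching might look like the sketch below (not the matching actually run against Companies Open House): reduce both the supplier name and the registered company name to a comparison key, and accept only exact matches on that key. The suffix list and the shape of the `register` argument are assumptions; anything that doesn’t match comes back as `None` for manual review, which is one reason this kind of matching is only ever partially successful.

```python
import re

# Hypothetical list of legal-form words to ignore at the end of a name.
LEGAL_SUFFIXES = {"ltd", "limited", "plc", "llp"}


def normalise_name(name: str) -> str:
    """Comparison key: lower-case, '&' -> 'and', punctuation -> spaces, trailing legal-form words dropped."""
    s = name.lower().replace("&", " and ")
    s = re.sub(r"[^a-z0-9 ]+", " ", s)
    words = s.split()
    while words and words[-1] in LEGAL_SUFFIXES:
        words.pop()
    return " ".join(words)


def match_suppliers(names, register):
    """Map each raw supplier name to a company number from `register`
    ({registered_name: company_number}), or None when no key matches exactly.
    If two registered names share a key, the later one wins."""
    index = {normalise_name(reg_name): number for reg_name, number in register.items()}
    return {name: index.get(normalise_name(name)) for name in names}
```

With a made-up register `{"ACME SERVICES LIMITED": "00000001"}`, "Acme Services Ltd." matches but "Acme Svcs" does not, which is the kind of near miss that needs either fuzzier matching or a human.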

Thoughts on those spending data guidelines

In general I still think they’re fairly good, and most of the shortcomings have been identified in the comments, or emailed to us (we didn’t explicitly state that the data should be available under an open licence such as the one at data.gov.uk, and we definitely should have done). However, adding this data to OpenlyLocal (as well as providing a useful database for the community) has crystallised some thoughts:

- Identification of the bodies is essential, and I think we were right to make this a key point, but it’s likely we will need the government to provide a lookup table between VAT numbers and Company Numbers.

- Speaking of Government datasets, there’s no way of finding out the ancestry of a company – what its parent company is, what its subsidiaries are – and that’s essential if we’re to properly make use of this information, and similar information released by the government. Companies House bizarrely doesn’t hold this information, but the Office for National Statistics does, in the Inter Departmental Business Register. Although this contains a lot of information provided in confidence for statistical reasons, the relationships between companies aren’t confidential (they just aren’t stored in one place), so it would be perfectly feasible to release this information.

- We should probably be explicit whether the figures should include VAT (I think the Windsor & Maidenhead ones don’t include it, but the GLA imply that theirs might).

- Categorisation is going to be a tricky one to solve, as can be seen from the raw data for Windsor & Maidenhead – for example the Children’s Services Directorate is written as both Childrens Services & Children’s Services, and it’s not clear how this, or the subcategories, ties into standard classifications for government spending, making comparison across authorities tricky.

- I wonder what would be the downside to publishing the description details, even, potentially, the invoice itself. It’s probably FOI-able, after all.
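For the Childrens/Children’s problem in particular, a small normalisation step at least lets variant spellings be grouped and reviewed together. This sketch assumes that case, apostrophes and `&` versus `and` are the only differences worth ignoring; mapping the resulting groups onto a standard classification for government spending would still need a hand-built lookup table.

```python
import re
from collections import defaultdict


def category_key(label: str) -> str:
    """Comparison key for a spending category label: case-folded, straight and curly
    apostrophes dropped, '&' spelled out as 'and', whitespace collapsed."""
    s = label.casefold()
    s = re.sub(r"['\u2019]", "", s)
    s = s.replace("&", " and ")
    return " ".join(s.split())


def group_categories(labels):
    """Group raw category labels that share a key, so the variants can be reviewed together."""
    groups = defaultdict(set)
    for label in labels:
        groups[category_key(label)].add(label)
    return dict(groups)
```

Run over the Windsor & Maidenhead labels, "Childrens Services" and "Children’s Services" would land in the same group under the key `childrens services`.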

As ever, comments welcome, and of course all the data is available through the API under an open licence.

C

New feature: search for information by postcode

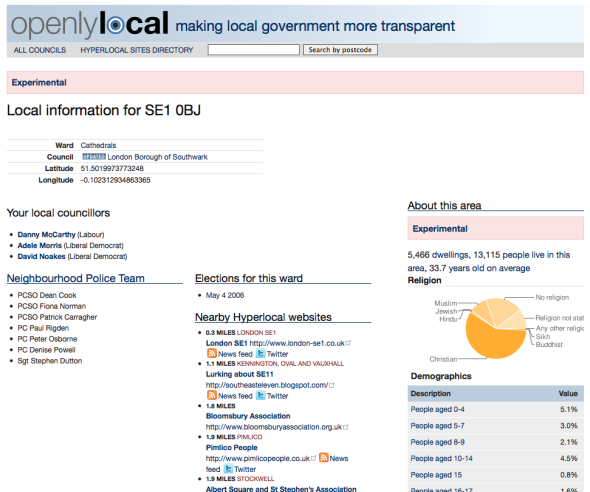

Posted: April 6, 2010 Filed under: api, hyperlocal, local government, open data, openlylocal, Uncategorized | Tags: api, Councils, data, hyperlocal, local, local government, opendata

Why was it important that the UK government open up the geographic infrastructure? Because it makes so many location-based things that were tortuous almost trivial.

Previously, getting open data about your local councillors, given just a postcode, was a tortuous business, requiring multiple calls to different sites. Now, it is easy. Just go to http://openlylocal.com/areas/postcodes/[yourpostcodehere] and, bingo, you’re done.

You can also just put your postcode in the search box on any OpenlyLocal page to do the same thing. And, obviously, you can also download the data as XML or JSON, and with an open data licence that allows reuse by anybody, even commercial reuse.
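For anyone scripting against this, building the lookup URL is straightforward. This sketch assumes two things the post doesn’t state: that the postcode can be passed upper-cased with spaces removed, and that the XML and JSON versions are reached by appending `.xml` or `.json` to the URL in the usual Rails style.

```python
from typing import Optional
from urllib.parse import quote

# Base URL from the post; the postcode normalisation and format suffix below are assumptions.
BASE_URL = "http://openlylocal.com/areas/postcodes/"


def postcode_url(postcode: str, fmt: Optional[str] = None) -> str:
    """Build the postcode-lookup URL, optionally for the XML or JSON version."""
    # Assumption: the site accepts the postcode upper-cased with internal spaces removed.
    code = "".join(postcode.split()).upper()
    url = BASE_URL + quote(code)
    if fmt is None:
        return url
    if fmt not in ("xml", "json"):
        raise ValueError(f"unsupported format: {fmt!r}")
    # Assumption: Rails-style format extension (.xml / .json).
    return f"{url}.{fmt}"
```

So `postcode_url("sw1a 1aa", "json")` gives `http://openlylocal.com/areas/postcodes/SW1A1AA.json`, ready to hand to any HTTP client.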

There’s still a little bit of tweaking to be done. I need to match up postcodes to county electoral divisions, and I’m planning on adding RDF to the data types returned. Finally, it’d be great to show the ward boundaries on a map, but I think that may take a little more work.

The GLA and open data: did he really say that?

Posted: January 8, 2010 Filed under: local government, open data, openlylocal | Tags: GLA, gov 2.0, local, London, opendata, transport

The launch on Friday of the Greater London Authority’s open data initiative (aka London Datastore) was a curious affair, and judging from some of the discussions in the pub after, I think that the strangeness – a joint teleconferenced event with CES Las Vegas – possibly overshadowed its significance and the boldness of the GLA’s action.

First off, the technology let it down – if Skype wanted to give a demo of just how far short of prime time its video conferencing is, they did a perfect job. Boris did a great impromptu stand-up routine, looking for all the world like he was still up from the night before, but the people at CES in Las Vegas, whose images and words occasionally stuttered into life to interrupt the Windows/Skype error messages, missed the performance.

However in between the gags Boris came out with this nugget, “We will open up all the GLA’s data for free reuse”.

What does that mean, I wondered – all their data? All that’s easy to do? Does it include info from Transport for London (TfL) and the Metropolitan Police? To be honest I sort of assumed it was Boris just paraphrasing. Nevertheless, I thought, it could be a good stick to enforce change later on.

However, then it was Deputy Mayor Sir Simon Milton’s turn to give the more scripted, more plodding, more coherent version. This was the bit where we would find out what was really going to happen. What you need to realise is that the GLA doesn’t actually have a lot of its own data – mostly it’s just some internal stuff, slices of central government data, and groupings of London council info. The good stuff is owned by those huge bodies, such as TfL and the Met, that it oversees.

So when Simon said: “I hope that our discussions with the GLA group will be fruitful and that in the short term we can encourage them to release that data which is not tied to commercial contracts and in the longer term encourage them when these contracts come up for renewal to apply different contractual principles that would allow for the release of all of their data into the public domain“, all I heard was yada yada yada.

The next bit, however, genuinely took me by surprise:

“I can confirm today, however, that as a result of our discussions around the Datastore, TfL are willing to make raw data available through the Datastore. Initially this will be data which is already available for re-use via the TfL website, including live feeds from traffic cameras, geo-coded information on the location of Tube, DLR and Overground stations, the data behind the Findaride service to locate licensed mini-cab and private hire operators and data on planned works affecting weekend Tube services.

“TfL will also be considering how best to make available detailed timetabling data for its services and welcomes examples of other data which could also be prioritised for inclusion in the Datastore such as the data on live departures and Tube incidents on TfL’s website”

So stunned was I, in fact (and many others too), that we didn’t ask any questions when he finished talking, or for that matter congratulate Boris/Simon on the steps they were taking.

Yes, it’s nothing that hasn’t been done in Washington DC or San Francisco, and it isn’t as big a deal as the Government’s open data announcement on December 7 (which got scandalously little press coverage, even in the broadsheets, yet may well turn out to be the most important act of this government).

However, it is a huge step for local government in the UK and sets a benchmark for other local authorities to attain, and what the GLA has already achieved with Transport for London will only have come after a considerable trial of will, and one, significantly, that they won.

So, Simon & Boris, and all those who fought the battle with TfL, well done. Now let’s see some action with the other GLA bodies – the Met, London Development Agency, London Fire Brigade, the London Pensions Fund Authority in particular (I’m still trying to figure out its relationship to Visit London and the London Travel Watch).

Update: Video embedded below

Useful links:

- London Datastore

- List of packages that will be available for full launch on Jan 29th

- Other blog posts on the event.

- Twitter channel